Human-in-the-Loop: Why You Must Design for User Control, Not Full Automation in AI Systems

Posted by deeepakbagada25@gmail.com on October 13, 2025

Discover why Human-in-the-Loop AI design outperforms full automation. Learn how to build critical oversight points, approval workflows, and trust-building strategies into AI systems in 2025.

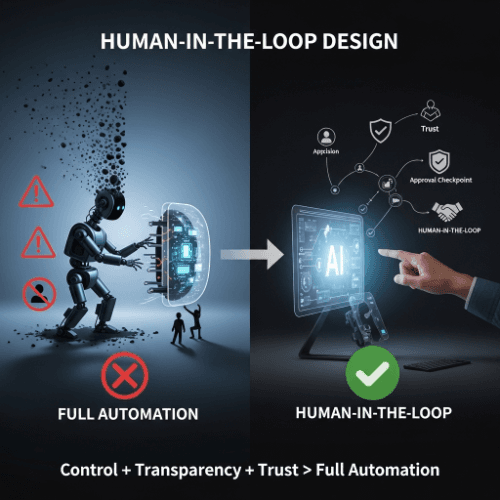

The allure of fully autonomous AI systems is powerful: imagine agents that handle every task from start to finish without human intervention. Yet many of the most successful AI implementations in 2025 incorporate Human-in-the-Loop (HITL) design principles that maintain user control, enable oversight, and build trust through transparency. Rather than pursuing complete automation, organizations that design thoughtfully for human-AI collaboration tend to achieve better outcomes, higher adoption rates, and more sustainable long-term value.

This comprehensive guide explores why human oversight remains essential, how to design effective HITL workflows, and which decisions should always require human judgment regardless of AI capabilities.

The Full Automation Fallacy

The promise of "set it and forget it" AI automation proves illusory when systems encounter edge cases, make errors with significant consequences, or operate in domains requiring ethical judgment and contextual understanding that AI cannot reliably provide.

Why Full Automation Falls Short

The Complexity Problem: Real-world scenarios contain endless variations, unexpected situations, and ambiguous contexts, and even sophisticated AI models cannot handle the resulting edge cases reliably without human guidance.

The Accountability Gap: When AI systems operate autonomously, determining responsibility for errors, unintended consequences, or harmful outcomes becomes complex, creating legal and ethical challenges organizations cannot ignore.

The Trust Deficit: Users trust systems they can oversee and control more than black-box automation. Full automation without transparency or intervention points creates anxiety and resistance rather than confidence and adoption.

The Learning Limitation: AI systems improve through feedback, but fully autonomous operation prevents humans from identifying improvement opportunities, correcting errors, and teaching systems about nuanced preferences and requirements.

Core Principles of Human-in-the-Loop Design

Effective HITL systems balance automation benefits with human judgment, creating collaborative workflows that leverage AI efficiency while maintaining meaningful human control.

Principle 1: Transparency Over Opacity

Explainable Decision-Making: Systems must clearly communicate why they recommend specific actions, what data informed decisions, and what alternatives were considered, enabling users to understand and trust AI reasoning.

Visible Confidence Levels: AI should indicate confidence in recommendations, flagging uncertain situations where human judgment is particularly valuable and highlighting when automation can proceed safely.

Audit Trail Maintenance: Complete logs of AI decisions, human interventions, and outcomes enable accountability, learning, and continuous improvement while providing documentation for compliance requirements.
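To make visible confidence levels and the audit trail concrete, here is a minimal Python sketch of an append-only decision log. The `AuditRecord` and `AuditLog` names and their fields are illustrative assumptions, not a standard schema; a production system would persist entries to durable, tamper-evident storage rather than an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One audit-trail entry: what the AI proposed and what, if anything, a human did."""
    action: str                           # e.g. "issue refund for order 1042"
    rationale: str                        # plain-language explanation shown to reviewers
    confidence: float                     # model-reported confidence in [0, 1]
    human_decision: Optional[str] = None  # "approved", "rejected", or None if fully automated
    reviewer: Optional[str] = None
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class AuditLog:
    """Append-only trail: entries are added, never edited or removed."""

    def __init__(self) -> None:
        self._records: list[AuditRecord] = []

    def append(self, record: AuditRecord) -> None:
        if not 0.0 <= record.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        self._records.append(record)

    def records(self) -> tuple[AuditRecord, ...]:
        # Hand out an immutable copy so callers cannot rewrite history.
        return tuple(self._records)

    def human_interventions(self) -> list[AuditRecord]:
        return [r for r in self._records if r.human_decision is not None]


log = AuditLog()
log.append(AuditRecord("send renewal reminder", "Contract ends in 30 days", 0.97))
log.append(AuditRecord("issue refund for order 1042", "Item reported damaged", 0.62,
                       human_decision="approved", reviewer="dana"))
```

Keeping confidence on every record means the same log can later answer which low-confidence decisions humans actually changed.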

Principle 2: Control at Critical Junctures

High-Stakes Decision Points: Actions with significant consequences—financial transactions, legal commitments, permanent deletions, or communications affecting relationships—must require explicit human approval before execution.

Reversibility and Undo: Where possible, enable users to reverse or modify AI actions, creating safety nets that reduce fear of automation errors and encourage experimentation with AI capabilities.

Emergency Override: Provide clear, accessible mechanisms for humans to halt AI operations immediately when unexpected situations arise or systems behave inappropriately.
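The three controls above can be combined in a single execution gate. This Python sketch assumes each action is tagged as high-stakes or not and optionally carries its own undo callback; `GuardedExecutor`, `ApprovalRequired`, and `Halted` are hypothetical names used only for illustration.

```python
import threading
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    name: str
    high_stakes: bool                          # payments, legal commitments, deletions, ...
    run: Callable[[], None]
    undo: Optional[Callable[[], None]] = None  # None marks the action as irreversible


class ApprovalRequired(Exception):
    """Raised when an action needs a named human approver before it can run."""


class Halted(Exception):
    """Raised while the emergency stop is engaged."""


class GuardedExecutor:
    def __init__(self) -> None:
        self._halted = threading.Event()  # emergency flag, safe to set from another thread
        self._undo_stack: list[Action] = []

    def halt(self) -> None:
        """Emergency stop: refuse all further actions until resume() is called."""
        self._halted.set()

    def resume(self) -> None:
        self._halted.clear()

    def execute(self, action: Action, approved_by: Optional[str] = None) -> None:
        if self._halted.is_set():
            raise Halted(f"halted; refusing {action.name!r}")
        # High-stakes or irreversible actions need explicit approval first.
        if (action.high_stakes or action.undo is None) and not approved_by:
            raise ApprovalRequired(f"{action.name!r} needs human approval")
        action.run()
        if action.undo is not None:
            self._undo_stack.append(action)

    def undo_last(self) -> Optional[str]:
        """Reverse the most recent reversible action and return its name, or None."""
        if not self._undo_stack:
            return None
        action = self._undo_stack.pop()
        action.undo()
        return action.name
```

Treating any action without an undo callback as needing approval is a deliberately conservative default: irreversible operations are exactly where an explicit human checkpoint matters most.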

Principle 3: Progressive Autonomy

Earned Trust Model: AI systems should start with high human oversight and gradually increase autonomy as they demonstrate reliability, enabling users to develop confidence through direct experience.

Customizable Automation Levels: Allow users to adjust automation depth based on comfort, expertise, and context—power users might prefer deeper automation while new users benefit from more touchpoints.

Context-Aware Oversight: Systems should automatically increase human involvement for unfamiliar situations, high-risk operations, or scenarios where past performance indicates higher error probability.
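One way to encode earned trust, user-set automation levels, and context-aware oversight is a small policy function. In this Python sketch the three autonomy levels, the 50-decision minimum history, and the 95% acceptance threshold are all hypothetical values chosen for illustration; real thresholds should come from your own error data.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST_ONLY = 0   # AI drafts, a human performs the action
    APPROVE_EACH = 1   # AI acts only after explicit approval
    NOTIFY_AFTER = 2   # AI acts, a human is notified and can reverse it


@dataclass
class TrackRecord:
    accepted: int = 0  # proposals humans approved unchanged
    rejected: int = 0  # proposals humans overrode or corrected

    @property
    def total(self) -> int:
        return self.accepted + self.rejected

    @property
    def acceptance_rate(self) -> float:
        return self.accepted / self.total if self.total else 0.0


def autonomy_for(record: TrackRecord, high_risk: bool, user_max: Autonomy,
                 min_history: int = 50, promote_at: float = 0.95) -> Autonomy:
    """Choose an autonomy level; both thresholds are illustrative, not recommendations."""
    if record.total < min_history:
        level = Autonomy.SUGGEST_ONLY      # unfamiliar task: not enough evidence yet
    elif high_risk:
        level = Autonomy.APPROVE_EACH      # risky work never runs unattended
    elif record.acceptance_rate >= promote_at:
        level = Autonomy.NOTIFY_AFTER      # autonomy earned through a reliable track record
    else:
        level = Autonomy.APPROVE_EACH
    return min(level, user_max)            # the user's chosen ceiling always wins
```

Because `Autonomy` is an `IntEnum`, the user's preference acts as a simple cap via `min`, so no policy rule can grant more autonomy than the user opted into.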

Principle 4: Meaningful Human Work

Eliminate Rubber-Stamping: Don't present human oversight as meaningless approval clicks. Ensure review processes provide sufficient context for informed decisions and highlight items genuinely requiring human judgment.

Respect Human Time: Aggregate approvals sensibly, provide clear prioritization, and streamline workflows to respect human attention while maintaining effective oversight of AI operations.

Enhance Rather Than Replace: Position AI as augmenting human capabilities—doing the heavy lifting while humans provide strategic direction, final judgment, and creative input that machines cannot replicate.
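Aggregating and prioritizing approvals can be as simple as grouping similar items and ordering the groups by their most consequential member. This Python sketch assumes each pending item already carries a `kind` and an estimated `impact`; both fields, and the `build_review_queue` helper, are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PendingApproval:
    kind: str       # similar items share a kind, e.g. "refund" or "email_reply"
    item_id: str
    impact: float   # estimated cost of a wrong decision, in any consistent unit


def build_review_queue(
    pending: list[PendingApproval],
) -> list[tuple[str, list[PendingApproval]]]:
    """Group similar approvals so they can be reviewed together, highest impact first."""
    groups: dict[str, list[PendingApproval]] = defaultdict(list)
    for item in pending:
        groups[item.kind].append(item)
    for items in groups.values():
        items.sort(key=lambda i: i.impact, reverse=True)
    # A group's priority is set by its most consequential item, not by its size.
    return sorted(groups.items(), key=lambda kv: kv[1][0].impact, reverse=True)
```

Ranking groups by their highest-impact item keeps one risky refund from being buried behind a long list of routine replies.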

Critical Decisions Requiring Human Judgment

Certain categories of decisions should always involve human oversight regardless of AI sophistication due to ethical, legal, or strategic considerations.

Financial and Legal Commitments

Why Human Oversight: Financial transactions and legal agreements create binding obligations with significant consequences. Humans must verify appropriateness, understand implications, and accept responsibility consciously.

Implementation:

- Require explicit approval before processing payments

- Present clear summaries of contractual terms

- Show cost/benefit analysis for financial decisions

- Enable comparison of alternatives before commitment
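A practical detail behind requiring explicit approval for payments is making sure the approval covers exactly the terms the human reviewed. This Python sketch, with hypothetical `PaymentProposal`, `approve`, and `process_payment` helpers, binds each approval to a SHA-256 fingerprint of the proposal so that an amount or payee changed after sign-off is rejected. It stands in for, and does not call, any real payment API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class PaymentProposal:
    payee: str
    amount: Decimal
    currency: str
    reason: str

    def summary(self) -> str:
        return f"Pay {self.amount} {self.currency} to {self.payee} ({self.reason})"

    def fingerprint(self) -> str:
        # Hash the exact terms the approver saw; any later edit changes the hash.
        payload = json.dumps(asdict(self), sort_keys=True, default=str)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Approval:
    approver: str
    fingerprint: str


def approve(proposal: PaymentProposal, approver: str) -> Approval:
    """Record that a named person approved these exact terms."""
    return Approval(approver, proposal.fingerprint())


def process_payment(proposal: PaymentProposal, approval: Optional[Approval]) -> str:
    if approval is None:
        raise PermissionError("payments require explicit human approval")
    if approval.fingerprint != proposal.fingerprint():
        raise PermissionError("terms changed after approval; approve again")
    # Placeholder: a real system would call its payment provider here.
    return f"processed: {proposal.summary()} (approved by {approval.approver})"
```

The approval also records who signed off, which feeds directly into the accountability trail.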

Ethical and Moral Choices

Why Human Oversight: AI lacks genuine understanding of ethics, cultural sensitivity, and human values. Decisions affecting people's lives, fairness, or moral dimensions require human judgment informed by empathy and principles.

Implementation:

- Flag decisions with ethical dimensions for human review

- Provide context about affected stakeholders

- Present multiple perspectives on contentious issues

- Enable humans to apply organizational values consciously

Strategic Direction and Priorities

Why Human Oversight: Business strategy, resource allocation, and priority setting require understanding organizational context, competitive dynamics, and long-term vision that AI cannot fully grasp.

Implementation:

- Present AI recommendations with supporting analysis

- Show trade-offs and alternative strategies

- Enable humans to weight factors based on current priorities

- Facilitate strategic discussion rather than dictating choices
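Letting humans weight factors works best when the division of labor is explicit: the AI scores each option per factor, and a person sets the weights. This Python sketch uses a simple weighted average; the option names, factors, and scores are invented for illustration, and a weighted average is only one of many ways to combine criteria.

```python
def rank_options(
    scores: dict[str, dict[str, float]],
    weights: dict[str, float],
) -> list[tuple[str, float]]:
    """Rank options by a weighted average of AI-estimated factor scores.

    scores:  option -> {factor: score in [0, 1]}, produced by the AI analysis
    weights: factor -> importance, set by a human to reflect current priorities
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    ranked = []
    for option, factors in scores.items():
        missing = set(weights) - set(factors)
        if missing:
            raise KeyError(f"{option!r} has no score for {sorted(missing)}")
        value = sum(factors[f] * w for f, w in weights.items()) / total
        ranked.append((option, round(value, 3)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


analysis = {
    "expand_eu": {"revenue": 0.9, "low_risk": 0.3, "fit": 0.6},
    "deepen_us": {"revenue": 0.6, "low_risk": 0.8, "fit": 0.9},
}
growth_first = rank_options(analysis, {"revenue": 3, "low_risk": 1, "fit": 1})
caution_first = rank_options(analysis, {"revenue": 1, "low_risk": 3, "fit": 1})
```

With revenue weighted heavily, `expand_eu` ranks first; shifting weight to `low_risk` puts `deepen_us` on top. That flip is precisely the trade-off a human should see and own.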

Customer-Facing Communications

Why Human Oversight: Communications affecting customer relationships, brand reputation, or sensitive situations require human judgment about tone, timing, and appropriateness that AI cannot reliably provide.

Implementation:

- Review AI-drafted communications before sending

- Flag messages with sensitive content automatically

- Let reviewers edit drafts in place, so the time saved by AI drafting is preserved

- Enable humans to add personal touches and context
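Automatic flagging of sensitive drafts can start as simply as pattern matching, as long as flagged messages are routed to a person rather than silently blocked or sent. This Python sketch uses a hypothetical, deliberately small set of keyword categories; it will miss paraphrases and produce false positives, which is why every draft still goes to review and the flag only sets priority.

```python
import re

# Illustrative keyword patterns only; a real deployment would use a tuned classifier.
SENSITIVE_PATTERNS = {
    "legal": re.compile(r"\b(lawsuit|attorney|legal action|liabilit(?:y|ies))\b", re.IGNORECASE),
    "money": re.compile(r"\b(refund|chargeback|compensation)\b", re.IGNORECASE),
    "churn": re.compile(r"\b(cancel\w*|close my account)\b", re.IGNORECASE),
    "distress": re.compile(r"\b(bereavement|passed away|emergency)\b", re.IGNORECASE),
}


def review_reasons(draft: str) -> list[str]:
    """Return the sensitive categories detected in an AI-drafted message."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(draft)]


def route_draft(draft: str) -> str:
    """Every draft is reviewed; flagged drafts jump the queue with their reasons attached."""
    reasons = review_reasons(draft)
    if reasons:
        return "priority_review: " + ", ".join(reasons)
    return "standard_review"
```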

Designing Effective HITL Workflows

Practical implementation requires thoughtful workflow design that balances efficiency with effective human oversight.

Approval Workflow Patterns

Pre-Action Approval: AI proposes an action, presents its rationale and context, and waits for explicit human approval before executing it. Appropriate for high-stakes or irreversible decisions.

Confidence-Threshold Approval: AI executes high-confidence decisions autonomously but requests approval when its confidence falls below a defined threshold. Balances efficiency with safety.

Post-Action Review: AI executes the action and notifies humans, who can reverse it within a defined timeframe. Appropriate for lower-risk actions where speed matters but oversight remains valuable.

Batched Review: AI accumulates similar decisions for periodic human review. Efficient for repetitive decisions where patterns matter more than individual instances.
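These patterns are not mutually exclusive; a system can pick one per proposal. This Python sketch shows one possible routing policy, assuming each proposal carries reversibility, stakes, a confidence score, and a repetitiveness flag. The 0.9 threshold and the `choose_route` helper are hypothetical; confidence-threshold approval appears here as the rule that sends low-confidence, low-risk proposals back to a human before acting.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    PRE_ACTION_APPROVAL = "wait for approval before acting"
    POST_ACTION_REVIEW = "act, notify, allow reversal within a window"
    BATCHED_REVIEW = "act, then review in a periodic batch"


@dataclass
class Proposal:
    irreversible: bool
    high_stakes: bool
    confidence: float   # model-reported, in [0, 1]
    repetitive: bool    # one of many near-identical decisions


def choose_route(p: Proposal, threshold: float = 0.9) -> Route:
    """Map a proposal to one of the patterns above; the threshold is illustrative."""
    if p.irreversible or p.high_stakes:
        return Route.PRE_ACTION_APPROVAL   # never act first on these
    if p.confidence < threshold:
        return Route.PRE_ACTION_APPROVAL   # confidence-threshold fallback to a human
    if p.repetitive:
        return Route.BATCHED_REVIEW
    return Route.POST_ACTION_REVIEW
```

Checking stakes and reversibility before confidence matters: a model can be confidently wrong, so high confidence alone never unlocks an irreversible action.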

Context Presentation

Decision Summary: Concise explanation of what AI proposes, why this recommendation was selected, and what it accomplishes, enabling quick understanding without information overload.

Supporting Evidence: Relevant data, analysis, and reasoning that informed the recommendation, allowing humans to validate AI logic and identify potential issues.

Alternative Options: Other choices AI considered with pros/cons, enabling humans to select different approaches if context suggests alternatives are preferable.

Risk Assessment: Clear indication of potential downsides, uncertainties, and failure modes, ensuring humans understand what could go wrong and can evaluate risk appropriately.
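The four context elements map naturally onto a single review payload. In this Python sketch, the `ReviewCard` and `Alternative` types are illustrative assumptions; the `validate` step refuses to show a reviewer a proposal with no evidence or no stated risks, one concrete way to discourage rubber-stamping.

```python
from dataclasses import dataclass, field


@dataclass
class Alternative:
    description: str
    pros: list[str]
    cons: list[str]


@dataclass
class ReviewCard:
    summary: str                                       # what, why, and what it accomplishes
    evidence: list[str] = field(default_factory=list)  # data behind the recommendation
    alternatives: list[Alternative] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)     # downsides and failure modes

    def validate(self) -> None:
        # A card without evidence or risks invites rubber-stamping.
        if not self.summary.strip():
            raise ValueError("summary is required")
        if not self.evidence:
            raise ValueError("at least one piece of supporting evidence is required")
        if not self.risks:
            raise ValueError("state at least one risk, even a minor one")

    def render(self) -> str:
        """Plain-text view for the reviewer, in the order summary, evidence, alternatives, risks."""
        self.validate()
        lines = [f"PROPOSAL: {self.summary}", "EVIDENCE:"]
        lines += [f"  - {e}" for e in self.evidence]
        if self.alternatives:
            lines.append("ALTERNATIVES:")
            for alt in self.alternatives:
                lines.append(f"  - {alt.description}")
                lines += [f"      + {p}" for p in alt.pros]
                lines += [f"      - {c}" for c in alt.cons]
        lines.append("RISKS:")
        lines += [f"  - {r}" for r in self.risks]
        return "\n".join(lines)
```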

Building Trust Through Transparency

User adoption depends on trust, and trust develops through transparency, reliability, and demonstrated value over time.

Transparency Mechanisms

Plain Language Explanations: Avoid technical jargon, explain AI reasoning in terms users understand, and make decision-making processes accessible to non-experts.

Confidence Communication: Clearly indicate when AI is certain versus uncertain, when additional human judgment would be valuable, and when automation can proceed confidently.

Learning Communication: Show how AI improves from feedback, thank users for corrections, and demonstrate that human input makes systems better over time.

Trust-Building Strategies

Start Conservatively: Begin with high oversight, prove reliability in controlled scenarios, and gradually increase autonomy as users develop confidence through positive experiences.

Celebrate Collaboration: Frame AI and humans as partners rather than competitors, highlight complementary strengths, and show how collaboration produces superior outcomes.

Admit Limitations: Be transparent about what AI cannot do well, acknowledge failures openly, and position human judgment as essential rather than treating full automation as the aspirational ideal.

Demonstrate Value: Quantify time saved, errors prevented, and efficiency gained to show concrete benefits that justify ongoing AI investment and adoption effort.

Professional HITL System Implementation

SaaSNext, a leading web development, marketing, and AI solutions company based in Junagadh, specializes in implementing Human-in-the-Loop AI systems that balance automation efficiency with meaningful human control. Their expertise encompasses approval workflow design, transparency mechanisms, and trust-building strategies that drive adoption while maintaining accountability.

Whether you need custom HITL systems, AI workflow consulting, or comprehensive human-AI collaboration design, SaaSNext's experienced professionals ensure your AI implementations achieve sustainable success through thoughtful user-centric design.

Measuring HITL Success

Effective measurement ensures HITL systems deliver intended benefits while identifying improvement opportunities.

Key Performance Indicators

Adoption Metrics:

- User activation rate (percentage actively using AI features)

- Feature utilization depth (which capabilities users leverage)

- Sustained usage over time (retention and habit formation)

Efficiency Gains:

- Time saved per task through AI assistance

- Tasks completed per user per time period

- Reduction in errors compared to fully manual operations

Quality Indicators:

- Human override rate (how often users reject AI recommendations)

- Error rate for automated decisions

- User satisfaction scores with AI assistance

Trust Measures:

- Willingness to increase automation levels

- Comfort with reducing oversight for reliable operations

- Net Promoter Score for AI features
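Several of these indicators fall straight out of the audit trail. This Python sketch assumes a hypothetical per-decision record with `automated`, `overridden`, and `erroneous` flags; how you decide that a decision was erroneous (a customer complaint, a reversal, a QA sample) is a design choice left open here.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    user_id: str
    automated: bool    # executed without human review
    overridden: bool   # a reviewer rejected or changed the AI recommendation
    erroneous: bool    # later judged to be wrong


def rate(part: int, whole: int) -> float:
    return part / whole if whole else 0.0


def hitl_kpis(decisions: list[Decision], total_users: int) -> dict[str, float]:
    """Compute activation, override, and automated-error rates from a decision log."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    reviewed = [d for d in decisions if not d.automated]
    automated = [d for d in decisions if d.automated]
    return {
        # Share of all users who produced at least one AI-assisted decision.
        "activation_rate": rate(len({d.user_id for d in decisions}), total_users),
        # How often reviewers rejected or changed what the AI proposed.
        "override_rate": rate(sum(d.overridden for d in reviewed), len(reviewed)),
        # Error rate among decisions that ran with no human in the loop.
        "automated_error_rate": rate(sum(d.erroneous for d in automated), len(automated)),
    }
```

Computing the override rate only over reviewed decisions, and the error rate only over automated ones, keeps the two signals from diluting each other as the automation mix changes.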

Frequently Asked Questions

Q: Doesn't HITL slow down the benefits of automation? A: HITL does add approval steps, but it also tends to raise adoption, reduce costly errors, and build the trust that enables broader AI deployment. For most teams, those gains outweigh the marginal loss of speed.

Q: How do I determine which decisions need human oversight? A: Consider consequence severity, reversibility, ethical dimensions, legal requirements, and confidence levels—start with more oversight and reduce as reliability proves out.

Q: Won't users just rubber-stamp AI recommendations anyway? A: Proper HITL design provides meaningful context, highlights important decisions, and makes oversight valuable rather than burdensome—avoid presenting trivial approvals.

Q: How can I prevent HITL from creating approval bottlenecks? A: Use confidence-based thresholds, batch similar approvals, prioritize high-impact decisions, and gradually increase automation as trust develops.

Q: What if my users want full automation without oversight? A: Offer progressive autonomy where users can opt into deeper automation after demonstrating they understand systems and their limitations—earned rather than default autonomy.

Q: How do I handle situations where humans consistently override AI? A: High override rates indicate AI needs improvement, requirements need clarification, or humans need better understanding of AI capabilities—use as feedback for system refinement.